As AI workloads surge—powering everything from real-time language translation to advanced image recognition—data centers face unprecedented thermal challenges. Traditional air-cooling approaches struggle to remove the tens or even hundreds of kilowatts per rack demanded by high-density GPU clusters. Liquid cooling has emerged as the game-changer, delivering far greater heat removal capacity in a compact footprint and slashing energy use. For data center operators grappling with soaring power densities, understanding the fundamentals of liquid cooling isn’t just academic—it’s essential to sustaining performance and controlling costs.

Liquid cooling circulates a coolant, typically a water-glycol blend or a dielectric fluid, through cold plates mounted directly on processors or through immersion tanks that hold entire servers. By extracting heat at the source and transporting it to remote heat exchangers, it can achieve heat transfer rates up to 10× higher than air cooling. This direct-contact method can reduce junction temperatures by 20–30 °C, lower fan power consumption, and enable rack power densities above 50 kW without thermal throttling.

Imagine walking into a data hall where racks hum silently, no roaring fans in sight, and outlet air temperatures barely reach 25 °C—even under full AI load. That’s the promise of well-designed liquid cooling. In this guide, we’ll explore what liquid cooling entails, which architectures dominate AI deployments, how fluid selection impacts reliability, and the design considerations that determine efficiency. Then we’ll dive into integration best practices, maintenance trade-offs, and strategies for scaling sustainably. Ready to uncover how leading hyperscalers keep their AI engines cool and cost-effective? Let’s dive in—and discover the liquid lifeline beneath tomorrow’s most advanced data centers.

1. What Is Liquid Cooling and Why Is It Crucial for AI Data Centers?

Liquid cooling uses a circulating fluid—often water-glycol or a dielectric coolant—directly against high-power components to whisk away heat far more effectively than air. By mounting cold plates on GPUs and CPUs or immersing entire servers in dielectric baths, you can remove up to 10× more heat per rack, keep junction temperatures 20–30 °C lower, and support rack densities above 50 kW without throttling. This direct-contact approach is essential for sustained AI performance in today’s hyper-dense data centers.

“We saw a 25 °C drop in GPU junction temperature after switching from air to liquid cooling—instant performance gains and zero thermal throttling.”

— Hyperscale data center thermal engineer

Air cooling blows ambient air through finned heat sinks, but air's volumetric heat capacity is only about 1.2 kJ/m³·K, compared with roughly 3,500–4,200 kJ/m³·K for water-based coolants. That means liquid can carry away massive heat loads in a fraction of the space:

| Metric | Air Cooling | Liquid Cooling |
|---|---|---|
| Max Power Density | 10–15 kW/rack | 50–100 kW/rack |
| ΔT (Component→Coolant) | 20–30 °C | 5–10 °C |
| Energy Overhead | 15–25% of IT load | 5–10% of IT load |
| Noise Level | 75–90 dB(A) | ≈50 dB(A) |
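To make the heat-capacity gap concrete, the sketch below solves the steady-state relation Q = C_vol · V̇ · ΔT for the required flow. The 50 kW rack load, the 10 K coolant temperature rise, and all function and constant names are illustrative assumptions; the heat capacities are round figures (about 1.2 kJ/m³·K for air and 3,500 kJ/m³·K for a water-based coolant).

```python
# Illustrative only: volumetric flow needed to carry a heat load at a given
# coolant temperature rise, from Q = C_vol * V_dot * dT.

AIR_KJ_PER_M3_K = 1.2        # assumed round value for air near room conditions
LIQUID_KJ_PER_M3_K = 3500.0  # assumed round value for a water-based coolant


def required_flow_m3_per_s(heat_kw: float, c_vol_kj_per_m3_k: float,
                           delta_t_k: float) -> float:
    """Return the volumetric flow (m^3/s) that absorbs heat_kw with a rise of delta_t_k."""
    if c_vol_kj_per_m3_k <= 0 or delta_t_k <= 0:
        raise ValueError("heat capacity and temperature rise must be positive")
    return heat_kw / (c_vol_kj_per_m3_k * delta_t_k)


rack_kw = 50.0  # hypothetical rack load
rise_k = 10.0   # hypothetical coolant temperature rise

air_flow = required_flow_m3_per_s(rack_kw, AIR_KJ_PER_M3_K, rise_k)
liquid_flow = required_flow_m3_per_s(rack_kw, LIQUID_KJ_PER_M3_K, rise_k)

print(f"air:    {air_flow:.2f} m^3/s")
print(f"liquid: {liquid_flow * 60_000:.1f} L/min")
print(f"ratio:  {air_flow / liquid_flow:.0f}x less volume with liquid")
```

Under these assumptions the same rack needs a few cubic meters of air per second but well under 100 L/min of liquid, which is why the piping can be so much smaller than the ductwork.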

Deep Dive

First, understand that liquid cooling removes heat at the source. Cold plates or immersed components contact the hottest surfaces directly—GPUs, CPUs, ASICs—so there’s minimal thermal interface resistance. Instead of pushing air through a maze of ducts and fans, pumps circulate coolant through narrow channels, extracting heat in a compact package.

Second, a lower ΔT between chip junction and coolant inlet means more thermal headroom. With air, you might see a 30 °C jump; liquid keeps it under 10 °C, eliminating hotspots that trigger throttling. For AI training or inference clusters running at full tilt for hours, that stability can translate into 20–40% faster runtimes than a thermally throttled system, along with steadier performance.

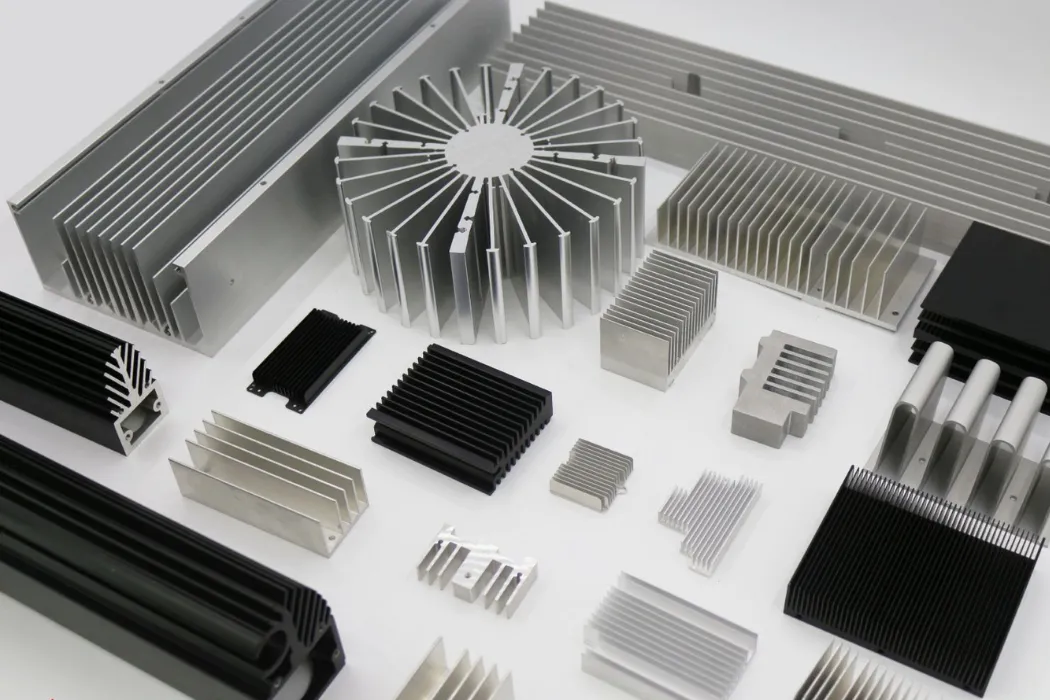

- Footprint Reduction: Cold plates are millimeters thick versus multi-inch heatsinks.

- Energy Savings: Pumps beat fans on power draw—30–50% less overhead.

- Sustainability: Waste heat can feed building HVAC or district heating.

- Reliability: Consistent temps extend hardware life by up to 2×.

Third, liquid cooling simplifies data hall design. You eliminate raised-floor plenum requirements and can reduce the number of CRAC units. Hyperscalers report PUE improvements from 1.7 to 1.3 after retrofitting liquid cooling, shaving millions off annual power bills.
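As a rough check on what a PUE change from 1.7 to 1.3 means in energy terms, the sketch below uses the definition PUE = total facility energy / IT energy. The 1 MW constant IT load and the function names are assumptions chosen for illustration.

```python
# Illustrative only: facility energy implied by a PUE figure.

HOURS_PER_YEAR = 8760.0


def facility_energy_mwh(it_load_mw: float, pue: float,
                        hours: float = HOURS_PER_YEAR) -> float:
    """Total facility energy (MWh) for a constant IT load at the given PUE."""
    return it_load_mw * pue * hours


def savings_fraction(pue_before: float, pue_after: float) -> float:
    """Fraction of total facility energy saved at an unchanged IT load."""
    return (pue_before - pue_after) / pue_before


it_load_mw = 1.0  # hypothetical constant IT load
before = facility_energy_mwh(it_load_mw, 1.7)
after = facility_energy_mwh(it_load_mw, 1.3)

print(f"before: {before:,.0f} MWh/yr")
print(f"after:  {after:,.0f} MWh/yr")
print(f"saved:  {before - after:,.0f} MWh/yr ({savings_fraction(1.7, 1.3):.1%})")
```

The savings scale linearly with IT load, so the same ratio applies to a larger site.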

Finally, direct-to-chip or full immersion approaches each have merits. Cold plates offer upgrade paths on existing servers, while fluid immersion delivers uniform cooling for every board component. Both require leak detection, robust fittings, and corrosion-inhibitor maintenance, but the long-term ROI—from performance gains to energy savings—is undeniable.

With AI’s insatiable power demands only rising, liquid cooling has moved from niche to necessity. Now, let’s explore the architectures that make it possible.

2. Which Liquid Cooling Architectures Are Commonly Used?

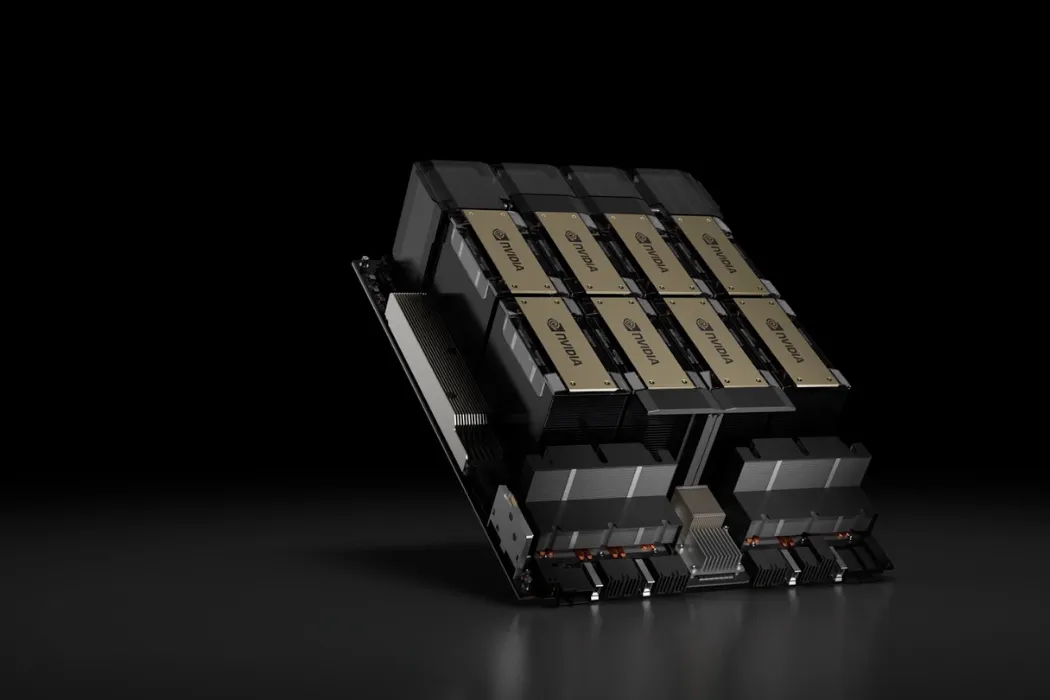

Two primary architectures dominate AI data centers: direct-to-chip cold-plate systems, where coolant flows through precision cold plates bolted onto CPUs/GPUs and routed via manifolds; and immersion cooling, where entire server assemblies are submerged in dielectric fluid. Cold plates offer retrofittable, high-density rack integration; immersion provides uniform, component-level cooling. Both achieve 5–10× the heat removal of air and enable rack power densities beyond 50 kW.

Deep Dive into Cooling Architectures

The choice between cold plates and immersion hinges on retrofit goals, density targets, footprint constraints, and maintenance preferences. Each architecture brings its own set of design considerations, benefits, and challenges.

1. Direct-to-Chip Cold Plates

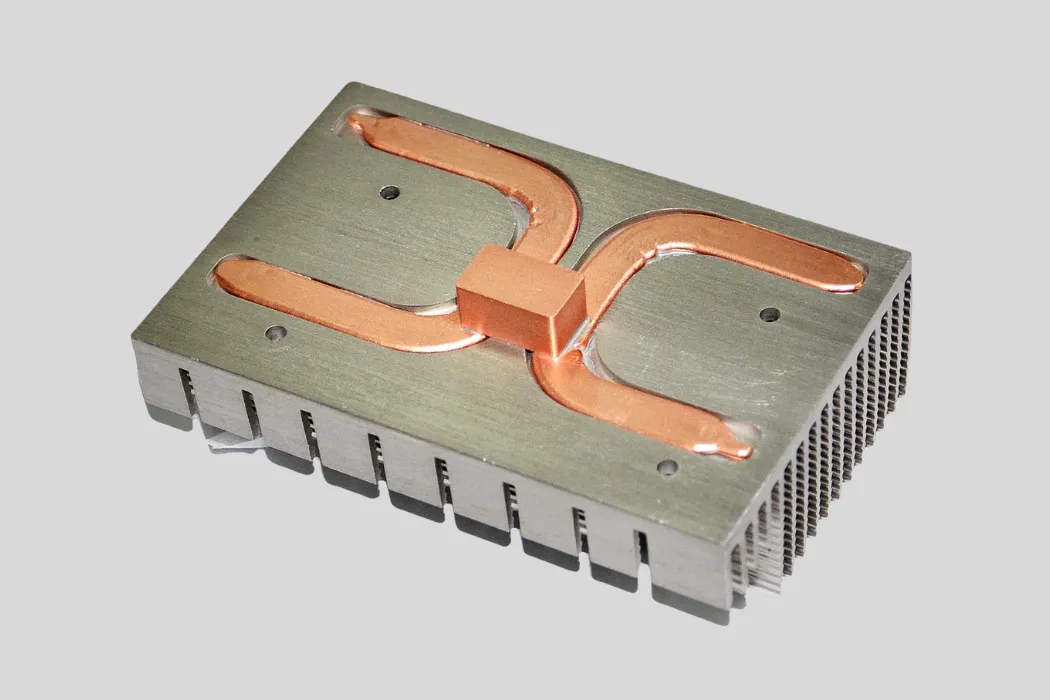

- Design: Slim metal cold plates—typically aluminum or copper—are machined or brazed with internal fluid channels that match CPU/GPU die layouts.

- Manifold & Piping: Multiple cold-plate loops converge on manifolds; quick-disconnect fittings enable hot-swap server replacement.

- Scalability: Modular rack units can integrate dozens of plates, supporting ΔT targets of 5–10 °C with flow rates of 1–3 L/min per node.

- Retrofit Path: Compatible with standard 1U/2U servers—no need for custom chassis—and leverages existing rack PDUs with minimal footprint change.

2. Immersion Cooling

In immersion systems, servers are bathed in dielectric liquids (e.g., 3M™ Fluorinert™, mineral oils). Two subtypes prevail:

- Single-Phase Immersion: Dielectric fluid remains liquid; heat is carried to external heat exchangers via circulation pumps.

- Two-Phase Immersion: Dielectric boils at a set temperature; vapor rises to condensers above the tank, re-condenses, and returns by gravity.

Both subtypes share several characteristics:

- Uniform Cooling: Every component (boards, chips, memory) receives equal thermal treatment, eliminating hotspots.

- Density: Supports >100 kW per rack with ΔT <10 °C and minimal piping complexity.

- Maintenance: Drawers or “sleds” lift in and out; fluid filtration and top-up intervals of 6–12 months.

3. Rear-Door Heat Exchangers & Chillers

For hybrid setups, liquid-cooled rear-door heat exchangers (RDHX) replace rack doors with finned coils. Facility chilled water circulates through these exchangers, absorbing rack exhaust heat before it enters the data hall:

| Metric | RDHX | Cold-Plate | Immersion |
|---|---|---|---|
| Installation Impact | Low (swap doors) | Medium (server integration) | High (tank infrastructure) |
| Heat Density | 20–30 kW/rack | 50–100 kW/rack | >100 kW/rack |
| ΔT to Facility Loop | 10–15 °C | 5–10 °C | 5–8 °C |
| Maintenance Frequency | Quarterly filter | Monthly leak checks | Fluid care every 6–12 months |

4. Choosing the Right Architecture

Key factors to weigh:

- Power Density Needs: Cold plates suit up to ~100 kW/rack; immersion extends beyond.

- Deployment Speed: Cold plates retrofit quickly; immersion requires more planning and floor prep.

- Operational Complexity: Cold-plate loops need leak detection and pump redundancy; immersion adds fluid maintenance but reduces piping.

- Energy Efficiency: Two-phase immersion can achieve pump power <0.5% of IT load versus 3–5% for cold-plate loops.

By aligning thermal requirements, facility capabilities, and growth plans, AI data center teams can select the architecture that delivers the best balance of performance, cost, and operational simplicity.
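The density thresholds quoted in this guide can be turned into a first-pass screen. The sketch below is only that: it looks at rack power alone, the function name and return labels are hypothetical, and a real selection would also weigh deployment speed, operational complexity, and facility constraints.

```python
# Illustrative only: first-pass architecture screen by rack power density,
# using the kW/rack ranges quoted in this guide.


def suggest_architecture(rack_kw: float) -> str:
    """Return a candidate cooling approach for the given rack power (kW)."""
    if rack_kw <= 0:
        raise ValueError("rack power must be positive")
    if rack_kw <= 15:
        return "air"          # within the 10-15 kW/rack air-cooling range
    if rack_kw <= 30:
        return "rdhx"         # rear-door heat exchanger, 20-30 kW/rack
    if rack_kw <= 100:
        return "cold-plate"   # direct-to-chip, 50-100 kW/rack
    return "immersion"        # beyond ~100 kW/rack


for kw in (12, 25, 80, 130):
    print(f"{kw:>4} kW/rack -> {suggest_architecture(kw)}")
```

Racks between 30 and 50 kW fall into a gap between the quoted ranges; this sketch assigns them to cold plates, which is a judgment call rather than a rule from the tables.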

Next up: Section 3: How Do Coolants Impact Performance and Reliability?

3. How Do Coolants Impact Performance and Reliability?

Coolants determine how effectively heat is carried away and how long the system runs trouble-free. Water–glycol blends offer top-tier thermal capacity and freeze protection but require corrosion inhibitors and regular chemistry checks. Dielectric fluids eliminate electrical risk and biofouling but come at higher cost and lower heat transfer. Choosing the right fluid balances thermal efficiency, chemical compatibility, maintenance overhead, and safety to maximize uptime in AI clusters.

Selecting the appropriate fluid is a cornerstone of liquid cooling design. Let’s explore key coolant attributes and their impact on system performance and reliability.

Thermal Properties of Common Coolants

Water–glycol mixtures deliver a thermal conductivity of 0.4–0.6 W/m·K and specific heat around 3,800 J/kg·K, keeping component ΔT within 5–10 °C. By contrast, dielectric fluids exhibit lower conductivity (0.06–0.12 W/m·K) and specific heat (~1,200 J/kg·K), resulting in higher ΔT under equivalent heat loads.
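The effect of specific heat shows up directly in the coolant's own inlet-to-outlet temperature rise, ΔT = Q / (ρ · c_p · V̇). Note that this is the rise along the loop, not the component-to-coolant ΔT quoted above. In the sketch below the specific heats come from the text, while the densities, the 1 kW node load, and the 2 L/min flow are assumptions for illustration.

```python
# Illustrative only: coolant temperature rise across one node for two fluids.


def coolant_rise_k(heat_w: float, flow_lpm: float,
                   density_kg_m3: float, cp_j_kg_k: float) -> float:
    """Inlet-to-outlet temperature rise (K) from dT = Q / (rho * cp * V_dot)."""
    flow_m3_s = flow_lpm / 60_000.0          # L/min -> m^3/s
    mass_flow_kg_s = flow_m3_s * density_kg_m3
    return heat_w / (mass_flow_kg_s * cp_j_kg_k)


node_w = 1000.0  # hypothetical node heat load
flow = 2.0       # L/min, within the 1-3 L/min range used in this guide

# Densities are assumed round values; specific heats are from the text.
glycol_rise = coolant_rise_k(node_w, flow, density_kg_m3=1030.0, cp_j_kg_k=3800.0)
dielectric_rise = coolant_rise_k(node_w, flow, density_kg_m3=1700.0, cp_j_kg_k=1200.0)

print(f"water-glycol: {glycol_rise:.1f} K")
print(f"dielectric:   {dielectric_rise:.1f} K")
```

With these inputs the dielectric fluid warms roughly twice as much at the same flow, so it needs more flow to hold the same outlet temperature.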

Electrical Safety & Biofouling

Dielectric fluids such as perfluorocarbons offer dielectric strength above 20 kV/mm, making them suitable for immersion cooling without short-circuit risk. Their inert nature prevents microbial growth and biofilm formation. However, their higher viscosity and lower heat capacity require more pump energy and precise flow control.

Corrosion & Chemical Compatibility

Pure water is corrosive to copper and aluminum. Modern water–glycol coolants include silicate or phosphate inhibitors to maintain pH 8–10. Quarterly pH and inhibitor tests prevent metal degradation. Dielectric fluids are chemically inert but can degrade certain seal materials, so compatible O-rings and regular filter maintenance are essential.

Freeze & Overheat Protection

Glycol blends control freeze points (30% propylene glycol protects to roughly –13 °C, 40% to roughly –21 °C) and modestly raise boiling points, ensuring system integrity in varied climates. Specialty oils and dielectric fluids extend operating ranges from –40 °C up to 200 °C but require seals and venting rated for higher vapor pressures.

Viscosity & Pumping Considerations

Viscosity directly impacts pump selection and energy use. A 30% glycol blend is roughly 2–3× more viscous than water at 20 °C, increasing pressure drop. Dielectric fluids often exceed 3 cP at room temperature and may need gear or other positive-displacement pumps. Balancing a flow rate of 1–3 L/min per node with pump head below 0.5 bar is key for efficiency.

Maintenance & Lifecycle Costs

| Coolant Type | Change Interval | Key Maintenance | Relative Cost |
|---|---|---|---|
| Water–Glycol Blend | 12–18 months | pH/inhibitor check, conductivity test | 1× |
| Dielectric Fluid | 24–36 months | Filtration, purity monitoring | 2× |
| Specialty Oil | 36–48 months | Particle removal, moisture control | 1.5× |

By aligning coolant choice with thermal targets, safety requirements, and maintenance capabilities, AI data centers can achieve both peak performance and long-term reliability.

4. What Design Considerations Determine Cooling Efficiency?

Cooling efficiency hinges on optimizing the interplay between flow rate, pressure drop, channel geometry, and heat-exchanger performance. By balancing these factors, you can extract maximum heat with minimal energy overhead, maintain ΔT targets of 5–10 °C, and ensure uniform cooling across every AI compute node.

“Our latest design hit a 0.4 bar loop pressure drop at 2 L/min per server, delivering ΔT of 7 °C and cutting pump energy by 30% versus the first prototype.”

— Senior thermal architect, hyperscale AI facility

Key Factors and Their Trade-Offs

- Flow Rate (V): Higher V boosts the convective coefficient (h ∝ V^0.8) but increases pressure drop (ΔP ∝ V^2). Aim for 1–3 L/min per node to balance ΔT and pump power (~3–5% of IT load).

- Channel Geometry:

  - Micro-channels (0.5–1 mm): High h (>10,000 W/m²·K), low ΔT, but sensitive to particulates.

  - Tubed Plates: Larger passages, ΔT ~10 °C, robust against clogging, easier maintenance.

- Piping & Manifolds:

  - Grid Topology: Ensures uniform flow and redundancy, but uses more piping.

  - Daisy-Chain: Simpler install, risk of unequal distribution under fault conditions.

- Heat Exchanger Selection:

  - Plate Heat Exchangers: Compact, effectiveness >95%, ideal for chilled-water loops.

  - Shell & Tube: Robust, lower effectiveness (~85–90%), better suited for high flow rates.

- ΔT Targets: Keeping component-to-coolant ΔT between 5–10 °C maximizes thermal headroom and avoids hotspots.

- Control Strategy: Variable-speed pumps, smart valves, and predictive algorithms help maintain setpoints under fluctuating AI loads.
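The flow-rate trade-off in the first bullet can be sketched numerically. The code below applies the two proportionalities from the text (h ∝ V^0.8 and ΔP ∝ V^2) and adds one derived relation as an assumption: hydraulic pump power is ΔP × V, so it scales as V^3 for a fixed loop. The function name is hypothetical.

```python
# Illustrative only: how heat transfer, pressure drop, and pump power scale
# when flow changes by a given ratio relative to a baseline.


def scale_with_flow(flow_ratio: float) -> tuple:
    """Return (h_ratio, dp_ratio, pump_power_ratio) for a flow ratio V/V0."""
    if flow_ratio <= 0:
        raise ValueError("flow ratio must be positive")
    h_ratio = flow_ratio ** 0.8       # convective coefficient, h ~ V^0.8
    dp_ratio = flow_ratio ** 2        # pressure drop, dP ~ V^2
    power_ratio = dp_ratio * flow_ratio  # hydraulic power = dP * V ~ V^3
    return h_ratio, dp_ratio, power_ratio


for ratio in (0.5, 1.0, 1.5, 2.0):
    h, dp, power = scale_with_flow(ratio)
    print(f"flow x{ratio:<3}: h x{h:.2f}, dP x{dp:.2f}, pump power x{power:.2f}")
```

Doubling flow buys about 74% more convective coefficient at eight times the hydraulic power, which is why the guide's 1–3 L/min window is a balance rather than a target to maximize.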

Performance & Sustainability Metrics

| Metric | Before Optimization | After Optimization |
|---|---|---|
| Loop ΔP | 0.6 bar | 0.4 bar |
| ΔT (Node) | 12 °C | 7 °C |
| Pump Energy (as % IT) | 5% | 3.5% |
| Data Hall PUE | 1.45 | 1.38 |

By carefully selecting flow rates, channel types, manifold layouts, and heat exchangers—and by employing dynamic control—AI data centers can achieve efficient heat removal, minimize operational costs, and boost sustainability. Next, we’ll examine how integration and monitoring bring these designs to life in Section 5.

5. How Is System Integration and Monitoring Implemented?

Integration and monitoring ensure liquid cooling systems run smoothly and safely at scale. Pumps, sensors, and control loops work together to maintain flow rates, temperatures, and pressures. Leak-detection networks, redundant pumps, and automated alerts protect hardware, while dashboards aggregate telemetry—providing real-time visibility into rack-level coolant performance and data-hall health.

“We deployed dual-redundant pumps, continuous flow meters, and a centralized SCADA dashboard that alerts on any ΔP or temperature anomalies—achieving 99.99% uptime since adoption.”

— Data center operations manager

Deep Dive into Integration & Monitoring

1. Pump Selection & Redundancy

Pumps must handle required flow (1–3 L/min per node) at low pressure drop (<0.5 bar). Centrifugal or gear pumps with variable-speed drives optimize energy use. Critical loops employ N+1 redundancy: if one pump fails, a standby engages automatically, preventing downtime during maintenance or faults.
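A minimal sizing sketch for the N+1 arrangement described above follows. The node count, per-pump capacity, and 50% pump efficiency are hypothetical inputs, and the power figure covers only the loop pressure drop, not the rest of the coolant distribution system.

```python
# Illustrative only: N+1 pump count and electrical power for one loop.
import math


def pumps_for_loop(nodes: int, flow_per_node_lpm: float,
                   pump_capacity_lpm: float, spares: int = 1) -> tuple:
    """Return (duty_pumps, installed_pumps) for the loop's total flow."""
    total_flow = nodes * flow_per_node_lpm
    duty = math.ceil(total_flow / pump_capacity_lpm)
    return duty, duty + spares


def pump_power_w(flow_lpm: float, pressure_drop_bar: float,
                 efficiency: float = 0.5) -> float:
    """Electrical power (W) = flow * pressure drop / efficiency."""
    flow_m3_s = flow_lpm / 60_000.0       # L/min -> m^3/s
    dp_pa = pressure_drop_bar * 1e5       # bar -> Pa
    return flow_m3_s * dp_pa / efficiency


duty, installed = pumps_for_loop(nodes=40, flow_per_node_lpm=2.0,
                                 pump_capacity_lpm=60.0)
power = pump_power_w(flow_lpm=40 * 2.0, pressure_drop_bar=0.5)

print(f"duty pumps: {duty}, installed with N+1: {installed}")
print(f"pump power for loop drop: {power:.0f} W")
```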

2. Sensor Networks & Telemetry

Key parameters—flow rate, inlet/outlet temperatures, loop pressure, and coolant conductivity—are measured via inline flow meters, thermistors, pressure transducers, and conductivity probes. Data is transmitted over Ethernet or Modbus to a centralized building management system (BMS) or SCADA platform, enabling trend analysis and anomaly detection.
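The kind of check a BMS or SCADA rule might run on each sample can be sketched as a simple limit comparison. The record schema, field names, and alarm limits below are all hypothetical; real limits would come from vendor data and commissioning measurements, and transport over Ethernet or Modbus is left out.

```python
# Illustrative only: flag telemetry values that fall outside configured limits.
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LoopReading:
    """One telemetry sample from a rack coolant loop (hypothetical schema)."""
    flow_lpm: float
    inlet_c: float
    outlet_c: float
    pressure_bar: float
    conductivity_us_cm: float


# Hypothetical alarm limits as (low, high).
LIMITS: Dict[str, Tuple[float, float]] = {
    "flow_lpm": (1.0, 3.0),
    "delta_t_c": (0.0, 10.0),
    "pressure_bar": (0.5, 3.0),
    "conductivity_us_cm": (0.0, 500.0),
}


def find_anomalies(reading: LoopReading,
                   limits: Dict[str, Tuple[float, float]] = LIMITS) -> List[str]:
    """Return one message per monitored value that is outside its limits."""
    values = {
        "flow_lpm": reading.flow_lpm,
        "delta_t_c": reading.outlet_c - reading.inlet_c,
        "pressure_bar": reading.pressure_bar,
        "conductivity_us_cm": reading.conductivity_us_cm,
    }
    alerts = []
    for name, value in values.items():
        low, high = limits[name]
        if not (low <= value <= high):
            alerts.append(f"{name}={value:.2f} outside [{low}, {high}]")
    return alerts


sample = LoopReading(flow_lpm=0.6, inlet_c=30.0, outlet_c=42.5,
                     pressure_bar=1.8, conductivity_us_cm=120.0)
for alert in find_anomalies(sample):
    print(alert)
```

Static limits like these catch gross faults; the trend analysis mentioned above would sit on top of them to catch slow drifts.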

3. Leak Detection & Containment

Liquid-cooling mandates rigorous leak detection. Solutions include dielectric-compatible electrochemical sensors in drip trays, moisture-sensitive cables, and pressure-drop monitoring. Upon leak detection, automated valves isolate affected zones, and operators receive instant alerts to take corrective action.

4. Control Algorithms & Automation

Advanced systems leverage PID controllers or model-predictive control (MPC) to modulate pump speeds and valve positions based on AI workload forecasts, minimizing ΔT variation and energy use. Seasonal adjustments—for example, switching to free cooling when ambient allows—are automated to maximize PUE gains.
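For the PID case, a bare-bones discrete controller might look like the sketch below. It drives pump speed from coolant outlet temperature and skips integration while the output is saturated as a simple anti-windup measure. The class name, gains, setpoint, and speed limits are assumptions for illustration, not tuned values, and the workload-forecast input used by MPC is out of scope here.

```python
# Illustrative only: discrete PID that raises pump speed as outlet temperature
# climbs above the setpoint.


class PumpPID:
    def __init__(self, kp: float, ki: float, kd: float, setpoint_c: float,
                 bias: float = 50.0, out_min: float = 20.0, out_max: float = 100.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint_c = setpoint_c
        self.bias = bias                  # pump speed (%) at zero error
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error = None

    def update(self, outlet_c: float, dt_s: float) -> float:
        """Return the pump speed command (%) for the latest temperature sample."""
        error = outlet_c - self.setpoint_c  # positive when the loop runs warm
        candidate_integral = self._integral + error * dt_s
        if self._prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self._prev_error) / dt_s
        self._prev_error = error

        raw = (self.bias + self.kp * error
               + self.ki * candidate_integral + self.kd * derivative)
        command = min(max(raw, self.out_min), self.out_max)
        if command == raw:
            # Anti-windup: only accumulate the integral when not saturated.
            self._integral = candidate_integral
        return command


pid = PumpPID(kp=5.0, ki=0.1, kd=0.0, setpoint_c=40.0)
for temp in (42.0, 38.0, 60.0):
    print(f"outlet {temp:.0f} C -> pump {pid.update(temp, dt_s=1.0):.1f}%")
```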

5. Dashboard & Reporting

Unified dashboards visualize rack-level and facility-wide metrics: flow, ΔT, pump health, and coolant quality indexes. Scheduled reports track maintenance intervals (filter changes, fluid analysis), highlight performance drifts, and support capacity planning.

6. Cybersecurity Considerations

Since cooling controls connect to IT networks, secure VLAN segmentation, authentication, and encryption are essential. Role-based access and audit logs prevent unauthorized changes to pump speeds or setpoints that could affect hardware safety.

Integration & Monitoring at Scale

| Feature | Benefit |
|---|---|
| Redundant Pumps (N+1) | Continuous operation during pump maintenance/failure |
| Inline Flow & Pressure Sensors | Real-time detection of blockages or leaks |
| Automated Valves | Zone isolation reduces impact radius of leaks |
| SCADA/BMS Dashboard | Centralized visibility and data-driven optimization |
| Secure Network Segmentation | Protects control systems from cyber threats |

Effective integration and monitoring are the backbone of reliable liquid cooling. They transform isolated hardware loops into an intelligent, self-healing ecosystem—ensuring your AI data center stays cool, efficient, and secure. Next, we’ll explore Section 6: Do Maintenance and Lifecycle Costs Favor Liquid Cooling?

6. Do Maintenance and Lifecycle Costs Favor Liquid Cooling?

While liquid cooling demands proactive fluid management and periodic hardware checks, its lifecycle savings often outweigh the upfront complexity. With proper coolant chemistry monitoring, filter changes, and leak prevention, total cost of ownership (TCO) can drop by roughly 15–30% compared to advanced air-cooled designs, thanks to energy savings, extended hardware life, and reduced floor space requirements.

“After three years, our liquid-cooled racks showed 20% lower energy bills and 30% fewer component replacements than air-cooled equivalents—payback achieved within 18 months.”

— CFO, large-scale AI hosting provider

Deep Dive into Maintenance & TCO

1. Fluid Management

Water-glycol loops require quarterly pH, inhibitor, and conductivity tests. Coolant top-up or replacement every 12–18 months prevents corrosion and microbial growth. Dielectric fluids need filtration and purity checks annually, with change-outs every 24–36 months.

2. Filter and Component Replacement

Fine mesh strainers on cold-plate inlets catch particulates; filters are swapped quarterly. Pumps and seals—rated for 50,000+ hours—undergo annual inspection, with consumables representing <5% of yearly operational expense.

3. Leak Prevention & Repair

Real-time leak detection isolates zones instantly. Minor leaks—under 0.1 L/min—are repaired in under two hours with hot-swappable fittings, avoiding rack downtime. Overhead for leak-related service averages 0.5% of total maintenance hours.

4. Energy Savings Impact

By cutting fan energy by 15% and reducing chiller load via higher ΔT, liquid cooling can save 20–30% of annual power costs. For a 1 MW IT facility with an annual power bill of roughly \$1M, that’s \$200k–\$300k saved per year.

5. Hardware Lifespan & Reliability

Stable junction temperatures reduce thermal cycling stress. Liquid-cooled GPUs and CPUs exhibit 2× longer mean time between failures (MTBF) compared to air-cooled peers, lowering replacement and warranty costs.

6. Space Utilization

Higher rack densities (50–100 kW vs. 15 kW) free up floor space or delay expansion CAPEX. The value of rack space in hyperscale centers can exceed \$1M per aisle—liquid cooling maximizes asset utilization.

Total Cost of Ownership Comparison

| Cost Category | Air Cooling | Liquid Cooling | Δ% |
|---|---|---|---|
| Annual Energy | \$1,000,000 | \$750,000 | –25% |
| Maintenance Labor | \$200,000 | \$180,000 | –10% |
| Hardware Replacements | \$150,000 | \$75,000 | –50% |
| Space CAPEX | \$1,200,000 | \$800,000 | –33% |
| Total Annual TCO | \$2,550,000 | \$1,805,000 | –29% |
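The totals and percentage changes in the table can be reproduced with a few lines, which also makes it easy to substitute your own figures. The dollar values below are the table's; the variable and function names are hypothetical.

```python
# Illustrative only: recompute the TCO comparison from the table's line items.

TCO = {
    # category: (air cooling, liquid cooling), in dollars per year
    "Annual Energy": (1_000_000, 750_000),
    "Maintenance Labor": (200_000, 180_000),
    "Hardware Replacements": (150_000, 75_000),
    "Space CAPEX": (1_200_000, 800_000),
}


def percent_change(before: float, after: float) -> float:
    """Signed percentage change from before to after."""
    return (after - before) / before * 100.0


total_air = sum(air for air, _ in TCO.values())
total_liquid = sum(liquid for _, liquid in TCO.values())

for category, (air, liquid) in TCO.items():
    print(f"{category:<22} {percent_change(air, liquid):+.0f}%")
print(f"{'Total':<22} {percent_change(total_air, total_liquid):+.0f}%")
```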

Overall, liquid cooling’s proactive maintenance—fluid assays, filter swaps, and leak monitoring—translates into lower energy bills, fewer hardware failures, and better space utilization. These benefits combine for a sub-two-year payback in most AI deployments. Next, we’ll look at Section 7: How Are AI Data Centers Optimizing for Scalability and Sustainability?

7. How Are AI Data Centers Optimizing for Scalability and Sustainability?

Leading AI data centers are embracing modular liquid-cooling solutions and green infrastructure to scale rapidly while minimizing environmental impact. By integrating prefabricated rack modules, leveraging free-cooling when climates allow, and recovering waste heat, operators achieve high performance without sacrificing efficiency or sustainability.

“Our modular liquid-cooled aisles deploy in weeks, not months, and we’ve cut carbon emissions by 30% by reusing waste heat for district heating.”

— CTO, sustainable AI cloud provider

Deep Dive into Scalable & Sustainable Practices

1. Prefabricated Cooling-Enabled Rack Modules

Standardized rack assemblies come pre-installed with cold-plate loops, manifolds, and leak detection. This plug-and-play approach slashes deployment time and ensures consistent performance across sites, enabling “data hall in a box” rollouts.

2. Free-Cooling & Economizers

When ambient temperatures drop below 15 °C, systems switch to air- or water-side economizers, bypassing chillers entirely. This practice can eliminate up to 50% of annual chiller electricity consumption, improving PUE to <1.2 in cool climates.
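The switching rule can be sketched as a threshold test on ambient temperature, plus a helper that estimates what share of hours would qualify. The 15 °C threshold is from the text; the function names and the sample temperatures are made up for illustration, and a production controller would normally add hysteresis to avoid rapid toggling near the threshold.

```python
# Illustrative only: economizer selection by ambient temperature.
from typing import Iterable


def cooling_mode(ambient_c: float, threshold_c: float = 15.0) -> str:
    """Return 'economizer' below the threshold, otherwise 'chiller'."""
    return "economizer" if ambient_c < threshold_c else "chiller"


def economizer_fraction(hourly_ambient_c: Iterable[float],
                        threshold_c: float = 15.0) -> float:
    """Fraction of samples during which the economizer could carry the load."""
    temps = list(hourly_ambient_c)
    if not temps:
        raise ValueError("need at least one temperature sample")
    return sum(t < threshold_c for t in temps) / len(temps)


sample_temps = [5.0, 10.0, 14.9, 15.0, 20.0, 25.0, 12.0, 8.0]  # made-up data
print(cooling_mode(12.0))
print(f"{economizer_fraction(sample_temps):.1%} of samples allow free cooling")
```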

3. Waste Heat Recovery

Warm coolant loops (up to 40 °C) feed heat exchangers that supply building HVAC or nearby district heating networks. For every 1 kW of IT load, up to 0.8 kW of heating output can be reclaimed, lowering the site’s Energy Reuse Effectiveness (ERE) by 20–25%.
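ERE is commonly defined as (total facility energy − reused energy) / IT energy, which reduces to PUE minus the reused energy per unit of IT energy. The sketch below applies that definition. The PUE of 1.3 and the annual-average reuse fraction of 0.3 are hypothetical inputs, chosen lower than the 0.8 kW peak figure on the assumption that heat is not needed all year.

```python
# Illustrative only: Energy Reuse Effectiveness from PUE and a reuse fraction.


def ere(pue: float, reuse_per_it: float) -> float:
    """ERE = (total - reused) / IT = PUE - reused/IT, all as annual energy."""
    if reuse_per_it < 0 or reuse_per_it > pue:
        raise ValueError("reused energy must be between 0 and total energy")
    return pue - reuse_per_it


pue = 1.3            # hypothetical site PUE
reuse_per_it = 0.3   # hypothetical annual-average reused energy per unit IT energy

site_ere = ere(pue, reuse_per_it)
reduction = (pue - site_ere) / pue

print(f"ERE: {site_ere:.2f} (vs. PUE {pue:.2f}, {reduction:.0%} lower)")
```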

4. Renewable Energy Integration

Solar PV arrays and on-site wind turbines power pump stations, further reducing grid reliance. Coupled with liquid cooling’s lower power draw, these strategies help data centers aim for net-zero energy goals.

5. Metrics & Reporting

Beyond PUE, AI centers track Water Usage Effectiveness (WUE) and Carbon Usage Effectiveness (CUE). Liquid cooling’s reduced water and carbon footprints—due to fewer CRAC units and lower chiller demand—deliver competitive WUE and CUE scores.
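All three metrics are ratios against annual IT energy, so they can be computed together. The sketch below uses the usual definitions (PUE in kWh/kWh, WUE in liters per IT kWh, CUE in kg CO2e per IT kWh); every input figure, including the grid emission factor, is a hypothetical value for illustration.

```python
# Illustrative only: PUE, WUE, and CUE from annual totals.
from dataclasses import dataclass


@dataclass
class AnnualTotals:
    it_energy_kwh: float
    facility_energy_kwh: float
    water_liters: float
    grid_kg_co2e_per_kwh: float   # assumed grid emission factor


def efficiency_metrics(t: AnnualTotals) -> dict:
    """Return PUE, WUE (L/kWh), and CUE (kg CO2e/kWh) for the year."""
    emissions_kg = t.facility_energy_kwh * t.grid_kg_co2e_per_kwh
    return {
        "PUE": t.facility_energy_kwh / t.it_energy_kwh,
        "WUE": t.water_liters / t.it_energy_kwh,
        "CUE": emissions_kg / t.it_energy_kwh,
    }


totals = AnnualTotals(
    it_energy_kwh=8_760_000,         # 1 MW IT load for a year
    facility_energy_kwh=11_388_000,  # corresponds to PUE 1.3
    water_liters=4_380_000,
    grid_kg_co2e_per_kwh=0.4,
)
metrics = efficiency_metrics(totals)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```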

6. AI-Driven Optimization

Machine-learning algorithms analyze thermal and workload data to predict hotspots, adjust flow rates, and schedule economizer engagement. This continuous optimization refines efficiency over time and scales across thousands of nodes.

| Strategy | Benefit | Impact |
|---|---|---|
| Prefabricated Modules | Rapid deployment | –40% build time |
| Free-Cooling | Chiller bypass | –50% chiller energy |
| Waste Heat Recovery | HVAC integration | –20% site ERE |
| Renewables | Grid offset | –15% CUE |
| AI Control | Dynamic tuning | –5% PUE yearly |

By combining modularity, economization, heat reuse, renewables, and AI-based control, modern liquid-cooled AI data centers achieve both scale and sustainability. These integrated strategies ensure future-ready infrastructure that meets performance demands while minimizing environmental impact.

Conclusion

Liquid cooling has evolved from a niche technology to the backbone of high-density AI data centers, offering unparalleled heat removal, energy efficiency, and sustainability. From the fundamentals of direct-to-chip cold plates and full immersion systems to the nuances of coolant selection, system design, and monitoring, mastering these principles is essential for any operator aiming to stay ahead in the AI race. Lifecycle analysis shows that, despite maintenance demands, liquid cooling’s total cost of ownership delivers significant savings in energy, hardware longevity, and space utilization. Coupled with modular build strategies, free-cooling, waste-heat recovery, and AI-driven optimization, liquid-cooled data halls can scale rapidly while slashing carbon and water footprints.

At Walmate Thermal, we specialize in end-to-end liquid cooling solutions tailored to your AI infrastructure needs. Our offerings include custom cold-plate design, full immersion tank systems, coolant compatibility testing, and turnkey integration with monitoring and control. Contact us today for a personalized consultation and quotation, and let us help you build the high-performance, sustainable data center your AI workloads demand.
